The quality and style of the images you generate with Stable Diffusion is completely dependent on what model you use.

StabilityAI released the first public model, Stable Diffusion v1.4, in August 2022. In the coming months, they released v1.5, v2.0 & v2.1.

Soon after these models were released, users started to create their own custom models on top of the base models.

Today, most custom models are built on top of either SD v1.5 or SD v2.1. These custom models usually perform better than the base models.

"Stable Diffusion model" is used to refer to the official base models by StabilityAI, as well as all of these custom models.

This is Part 2 of the Stable Diffusion for Beginner's series.

Part 1: Getting Started: Overview and Installation

Part 2: Stable Diffusion Prompts Guide

Part 3: Stable Diffusion Settings Guide

Part 3: Models

Part 4: LoRAs

Part 5: Embeddings/Textual Inversions

Part 6: Inpainting

Part 7: Animation

Where to find Stable Diffusion models?

To keep up with the newest models, check Civitai.com and HuggingFace.co.

I prefer Civitai because users share many image examples, with prompts, for each model.

The model space is moving super fast: I wouldn't be surprised if a new model is created today and becomes the most popular model within a month. You can learn about Stable Diffusion developments in our weekly updates.

Note: you will have to have a Civitai account to view some of the models in this article, because many have NSFW capabilities.

HuggingFace is better if you have a model in mind and are just searching for it. Most of the time there will not be any image examples:

Stable Diffusion checkpoint models comes in two different formats: .ckpt and .safetensors. .safetensor files are preferable to .ckpt files because they have better security features.

You will either be running these models locally or in a Colab notebook (running in the cloud on Google's servers)

Installation instructions for running locally with AUTOMATIC1111:

Best Checkpoint Models

Dreamshaper

Great for: Fantasy Art, Illustration, Semirealism, Characters, Environments

Dreamshaper is a great model to start with because it's so versatile. It doesn't take much prompting out of the box to get good results, so it's great for beginners.

You can do a wide variety of subjects - characters, environments and animals in styles that span from very artistic to semirealism.

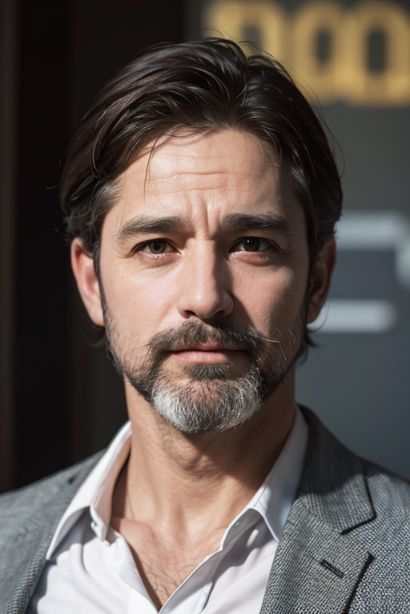

CyberRealistic

Great for: Photorealism, Real People

CyberRealistic is extremely versatile in the people it can generate. It's very responsive to adjustments in physical characteristics, clothing and environment. It is quite good at famous people.

We highly recommend you use lighting, camera and photography descriptors in your prompts.

We also recommend you use it with the textual inversion CyberRealistic Negative.

One of the model's key strengths lies in its ability to effectively process textual inversions and LORA, providing accurate and detailed outputs. Additionally, the model requires minimal prompts, making it incredibly user-friendly and accessible.

Cyberdelia, Model creator

majicMIX realistic

Great for: Photorealism, Women

majicMIX is a popular anime model: this is the realistic variant of that model.

The lighting, skin texture and photorealistic camera effects on this model are simply astounding.

The downside is it's nowhere near as versatile as other realistic models like CyberRealistic. Similar to other "beautiful women" models it produces a very similar face.

Realistic Vision

Great for: Photorealism, Real People

Photorealism model used for both celebrities and original characters.

Quite versatile in the types of people you can get.

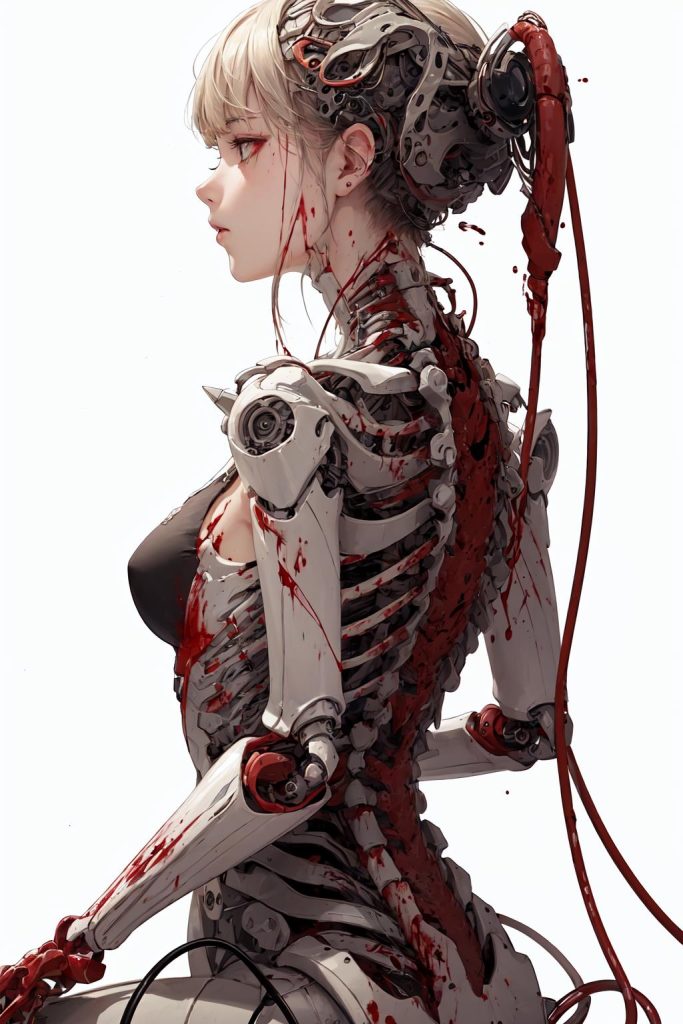

GhostMix

Great for: Anime, Semirealism

A very beautiful anime model that can veer into semirealism.

ReV Animated

Great for: 2.5D, Semirealisim, Illustration

ReV Animated is a highly versatile model with a great style. It's a mix of realism and anime, veering to the realism side. It's another beginner friendly model that requires minimal prompting to get great results.

ChilloutMix

Great for: Photorealism, Women

The most popular model of all time on Civitai. Images are high quality; good for producing the Kpop idol look, however not a very versatile model.

NeverEnding Dream

Great for: Fantasy Art, Illustration, Semirealism, Anime

NeverEnding Dream is intended as a complementary model to DreamShaper, to cover everything that DreamShaper is not good at.

It's intended for use with LoRAs, and can also generate anime.

Deliberate

Great for: Semirealism, Illustration, Fantasy Art

A "jack of all trades" model - you can try portraits, environments, fantasy etc. One of the most popular models of all time, however a bit outdated.

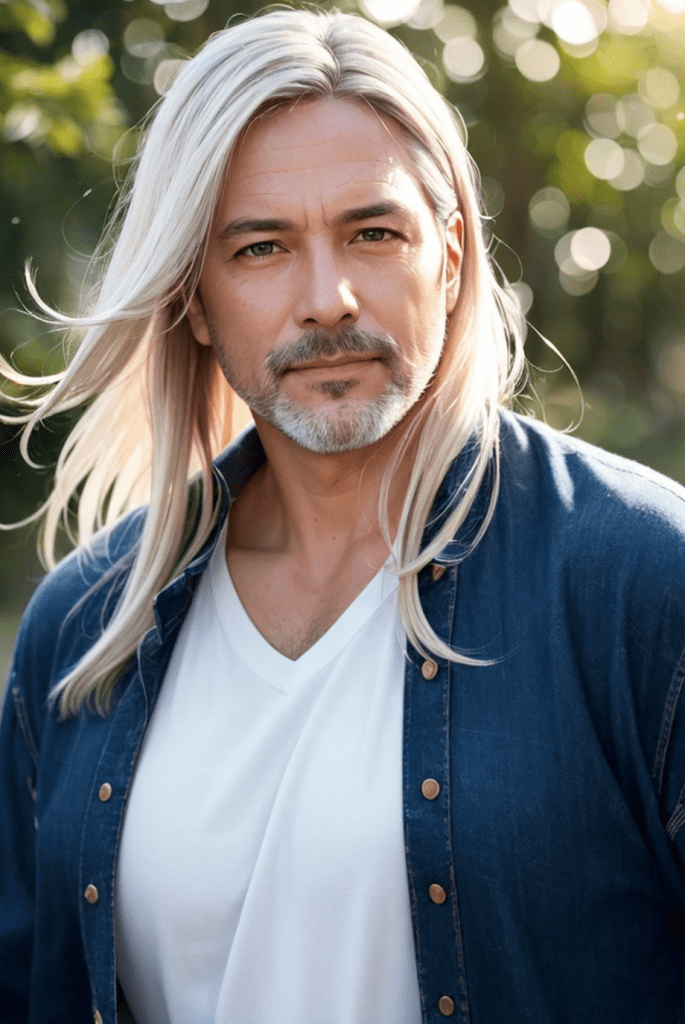

Henmix_Real

Great for: Realism

Good all-around realistic model. The creator's intention was to produce a model that would be good at all races.

Best Anime Models

Full comparison: The Best Stable Diffusion Models for Anime

Anime models can trace their origins to NAI Diffusion. NAI is a model created by the company NovelAI modifying the Stable Diffusion architecture and training method.

At the time of release (October 2022), it was a massive improvement over other anime models. Whilst the then popular Waifu Diffusion was trained on SD + 300k anime images, NAI was trained on millions.

Here's a NAI prompt guide and that is broadly applicable to most anime models.

Anything V5

Download Link • Model Information

Anything V3 was a wildly popular fine-tune of NAI Diffusion. V5 is the latest version.

AbyssOrangeMix3 (AOM3)

Download Link • Model Information

Latest version of AbyssOrangeMix, a series of mixes that produces a high-quality, artistic style.

Counterfeit

Download Link • Model Information

High quality anime model with a very artistic style. Created by gsdf, with DreamBooth + Merge Block Weights + Merge LoRA. Highly recommended.

MeinaMix

Download Link • Model Information

High quality anime model with a very artistic style. Created by gsdf, with DreamBooth + Merge Block Weights + Merge LoRA

Base Models

Nobody really uses the base models for generation anymore because the fine-tunes produce much better results. What the base models are useful for: training new models, training LoRAs.

Stable Diffusion v1.5

Stable Diffusion v1.5 was released in Oct 2022.

It produces slightly different results compared to v1.4. The general consensus is that it is slightly better, but the different is small.

v1.4 and 1.5 are the base for most of the models that people have created. It has a lot more celebrity and artist prompt recognition than 2.0

Stable Diffusion v2.1

v2.0 was created to solve what the creators saw as a potential for lawsuits by implementing a custom prompt interpreter that is able to filter out words they don't want you to use in prompts, purely for liability reasons (deep fake pron, etc).

v2.0 was very heavy-handed in it's filtering, and it became somewhat difficult to build good prompts for it.

v2.1 improves on the prompting and fixes a lot of what was missing from 2.0 in regard to celebrities and artists being missing. The filters were also improved to not overreact with false positives.

I would use 1.4 or 1.5 over 2.0 or 2.1 any day of the week.

Extras: Improve Your Results

Using LoRAs

You use LoRAs in combination with the above checkpoint models to add new subjects to your image. LoRAs can be trained on

For example, you could use an Studio Ghibli LoRA to make your outputs look more like the Studio Ghibli aesthetic.

Full Guide: Complete Guide to Stable Diffusion LoRAs

Using Textual Inversion / Embeddings

Embeddings (AKA Textual Inversion) are small files that contain additional concepts. You use them by downloading them and placing them in the folder stable-diffusion-webui/embeddings. You activate them by writing a special keyword in the prompt.

Negative Embeddings are files trained on bad quality images. By placing the activation keyword for these in your negative prompt, you'll get better quality images.

Some popular negative embeddings are EasyNegative and negative_hand.

List: Best Textual Inversion / Embeddings

FAQ

fp16 (floating point 16) and fp32 (floating point 32) refer to the format by which data is stored in the more, which can either be 16-bit (2 bytes), or 32-bit (4 bytes).

Unless you want to train or mix your own custom model, the smaller fp16 version is all you need. For casual users, the difference between the image quality produced by fp16 and fp32 is neglectable.

v1.5: support for NSFW, more custom models, better ControlNet support, more LoRA, TIs, have more artists and celebrities in the training data image set. Training set is 512x512, so optimal size for quick exploration is 512x512, which is kind of limited.

v2.1: Better support for photos, landscape. Training set is 768x768 so one get more interesting composition and detail, easier to explore and experiment starting at 768x768.