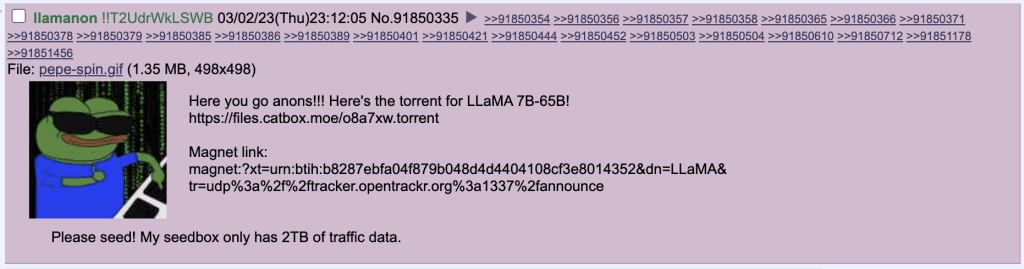

On March 3rd, user ‘llamanon’ leaked Meta’s LLaMA model on 4chan’s technology board /g/, enabling anybody to torrent it. A troll then attempted to add the torrent link to Meta’s official LLaMA GitHub repo.

Here's how to set up LLaMA on a Mac with an Apple Silicon chip.

Windows guide here.

Open your Terminal and enter these commands one by one:

git clone http://github.com/ggerganov/llama.cpp

cd llama.cpp

make

We're only going to be downloading the 7B model in this tutorial:

In the models folder in llama.cpp, you should have the following file structure:

13B

30B

65B

7B

llama.sh

tokenizer.model

tokenizer_checklist.chk

pipenv shell --python 3.10

You need to create a models/ folder in your llama.cpp directory that directly contains the 7B folder (or the folders for other model sizes) along with the sibling files from the LLaMA model download.
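Before running the conversion, it can help to confirm that the folder layout matches the listing above. The following is a minimal sketch, assuming you run it from the llama.cpp directory and only care about the 7B model; the `missing_model_entries` helper and the `EXPECTED_ENTRIES` tuple are hypothetical names made up for this illustration, not part of llama.cpp.

```python
from pathlib import Path

# Entries this tutorial expects directly inside models/ for the 7B model.
# (Hypothetical constant for this sketch, taken from the listing above.)
EXPECTED_ENTRIES = ("7B", "tokenizer.model", "tokenizer_checklist.chk")


def missing_model_entries(models_dir):
    """Return the expected entries that are absent from models_dir."""
    root = Path(models_dir)
    return [name for name in EXPECTED_ENTRIES if not (root / name).exists()]


if __name__ == "__main__":
    missing = missing_model_entries("models")
    if missing:
        print("Missing from models/:", ", ".join(missing))
    else:
        print("models/ layout looks right for the 7B model")
```

If anything is reported missing, the usual cause is an extra level of nesting, for example the download folder sitting inside models/ instead of its contents.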

Next, install the dependencies needed by the Python conversion script.

pip install torch numpy sentencepiece

The first script converts the model to "ggml FP16 format":

python convert-pth-to-ggml.py models/7B/ 1

This should produce models/7B/ggml-model-f16.bin - another 13GB file.
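The 13GB figure is roughly what you would expect from the arithmetic. Here is a back-of-the-envelope sketch, assuming the 7B model has about 6.7 billion parameters and ignoring file headers; `fp16_size_gb` is a hypothetical helper written for this illustration.

```python
# Assumption: the 7B model has roughly 6.7 billion parameters.
APPROX_PARAMS_7B = 6.7e9
BYTES_PER_FP16 = 2  # a 16-bit float occupies two bytes


def fp16_size_gb(n_params):
    """Approximate FP16 file size in gigabytes (10**9 bytes), ignoring headers."""
    return n_params * BYTES_PER_FP16 / 1e9


# Prints a value a little over 13 GB for the assumed parameter count.
print(f"{fp16_size_gb(APPROX_PARAMS_7B):.1f} GB")
```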

The second script "quantizes the model to 4-bits":

./quantize ./models/7B/ggml-model-f16.bin ./models/7B/ggml-model-q4_0.bin 2

This produces models/7B/ggml-model-q4_0.bin - a 3.9GB file. This is the file we will use to run the model.
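To give a feel for what "quantizing to 4 bits" means, here is a toy sketch in plain Python. It is not llama.cpp's actual algorithm or file layout: it simply stores one floating-point scale per block of weights and rounds each weight to a small signed integer. The function names, the [-7, 7] integer range, and the block size and scale width used in `bits_per_weight` are all assumptions chosen for illustration.

```python
def quantize_block(values):
    """Map floats to small signed integers in [-7, 7] plus one shared scale."""
    max_abs = max(abs(v) for v in values)
    # Choose the scale so the largest magnitude lands on +/-7.
    scale = max_abs / 7 if max_abs > 0 else 1.0
    quants = [max(-7, min(7, round(v / scale))) for v in values]
    return scale, quants


def dequantize_block(scale, quants):
    """Recover approximate floats from the scale and the small integers."""
    return [q * scale for q in quants]


def bits_per_weight(block_size, scale_bits):
    """Storage cost per weight: 4 bits each, plus the shared scale spread over the block."""
    return (block_size * 4 + scale_bits) / block_size


if __name__ == "__main__":
    weights = [0.12, -0.80, 0.33, 0.05]
    scale, quants = quantize_block(weights)
    print("quantized:", quants)
    print("restored: ", [round(x, 3) for x in dequantize_block(scale, quants)])
    # Hypothetical layout: 32 weights sharing one 32-bit scale.
    print("bits per weight:", bits_per_weight(32, 32))
```

The restored values are close to the originals but not identical, which is the trade-off: a much smaller file in exchange for a small loss of precision. The per-block scale is also why a "4-bit" file ends up somewhat larger than four bits per weight would suggest.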

Run the model:

./main -m ./models/7B/ggml-model-q4_0.bin \
  -t 8 \
  -n 128 \
  -p 'The first man on the moon was '

./main --help shows the options. -m is the model. -t is the number of threads to use. -n is the number of tokens to generate. -p is the prompt.
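If you want to try several prompts, it can be convenient to assemble the command from a script. The sketch below only uses the four flags described above (-m, -t, -n, -p); `build_main_command` is a hypothetical helper, and defaulting the thread count to `os.cpu_count()` is an assumption for this example rather than a recommendation from llama.cpp.

```python
import os
import shlex


def build_main_command(model_path, prompt, n_tokens=128, threads=None):
    """Assemble the ./main argument list using the -m, -t, -n and -p flags."""
    if threads is None:
        # Assumption: fall back to the number of CPUs reported by the OS.
        threads = os.cpu_count() or 1
    return [
        "./main",
        "-m", str(model_path),
        "-t", str(threads),
        "-n", str(n_tokens),
        "-p", prompt,
    ]


if __name__ == "__main__":
    cmd = build_main_command(
        "./models/7B/ggml-model-q4_0.bin",
        "The first man on the moon was ",
        threads=8,
    )
    # Print a shell-safe version of the command; to actually run it, pass
    # `cmd` to subprocess.run(cmd, check=True) from the llama.cpp directory.
    print(shlex.join(cmd))
```

Passing the arguments as a list avoids quoting problems when the prompt contains spaces or apostrophes.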

Hello,

Could you explain how to compile and install it under windows?

I’m not a computer specialist but I know a little bit about it, I think it’s great to have your own independent AI and not subject to the censorship of the multinationals, every human being should have their own AI and not only the rich as usual.

Thank you for your help.

Sorry for my English but I use a translator.

Hey Dru, here is the Windows version guide.