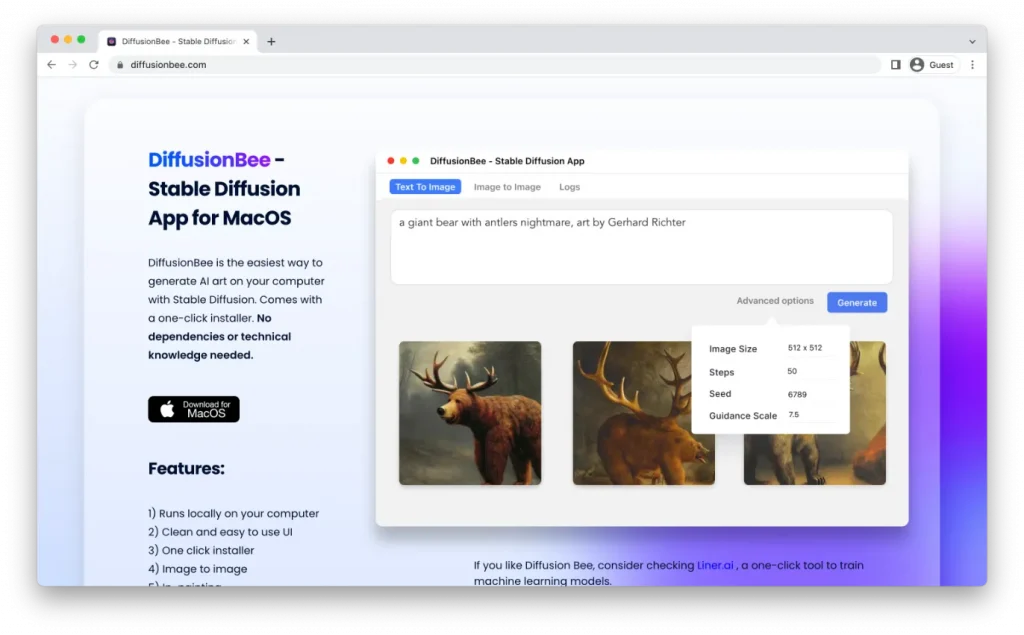

DiffusionBee, created by Divam Gupta, is by far the easiest way to get started with Stable Diffusion on a Mac. It is a regular macOS app, so you will not have to use the command line for installation.

While the feature set started off barebones, Gupta keeps adding to it, and there is a growing Discord community.

Requirements

- M1 or M2 Mac

- 8GB RAM minimum, 16GB RAM recommended

- macOS 12.5.1 or later
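If you would rather check these requirements from a script than from "About This Mac", here is a minimal sketch. The function names are my own, and it assumes that an `arm64` machine type means an M1 or M2 chip. RAM is passed in by hand because Python's standard library has no portable call for it.

```python
import platform

MIN_MACOS = (12, 5, 1)  # minimum macOS version from the list above
MIN_RAM_GB = 8          # minimum RAM from the list above


def parse_version(text):
    """Turn '12.5.1' into (12, 5, 1); pad short versions like '13' with zeros."""
    parts = [int(p) for p in text.split(".") if p.isdigit()]
    return tuple((parts + [0, 0, 0])[:3])


def meets_requirements(machine, macos_version, ram_gb):
    """Return True if the given machine type, macOS version and RAM are enough."""
    is_apple_silicon = machine == "arm64"  # assumption: arm64 means M1/M2
    new_enough = bool(macos_version) and parse_version(macos_version) >= MIN_MACOS
    return is_apple_silicon and new_enough and ram_gb >= MIN_RAM_GB


def check_this_machine(ram_gb):
    """Check the Mac this script runs on; mac_ver() returns '' on other systems."""
    return meets_requirements(platform.machine(), platform.mac_ver()[0], ram_gb)
```

Calling `check_this_machine(16)` on a 16GB Mac returns `True` or `False`. A Python build running under Rosetta may report `x86_64` even on Apple Silicon, so treat a `False` result as a hint rather than a verdict.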

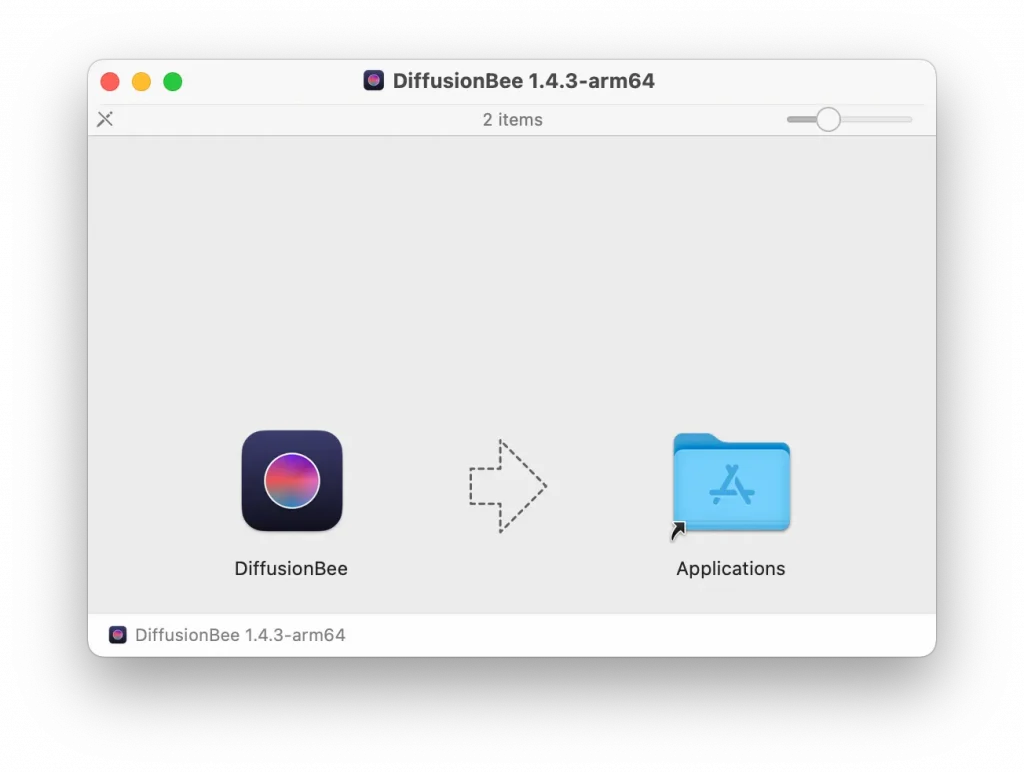

Setup

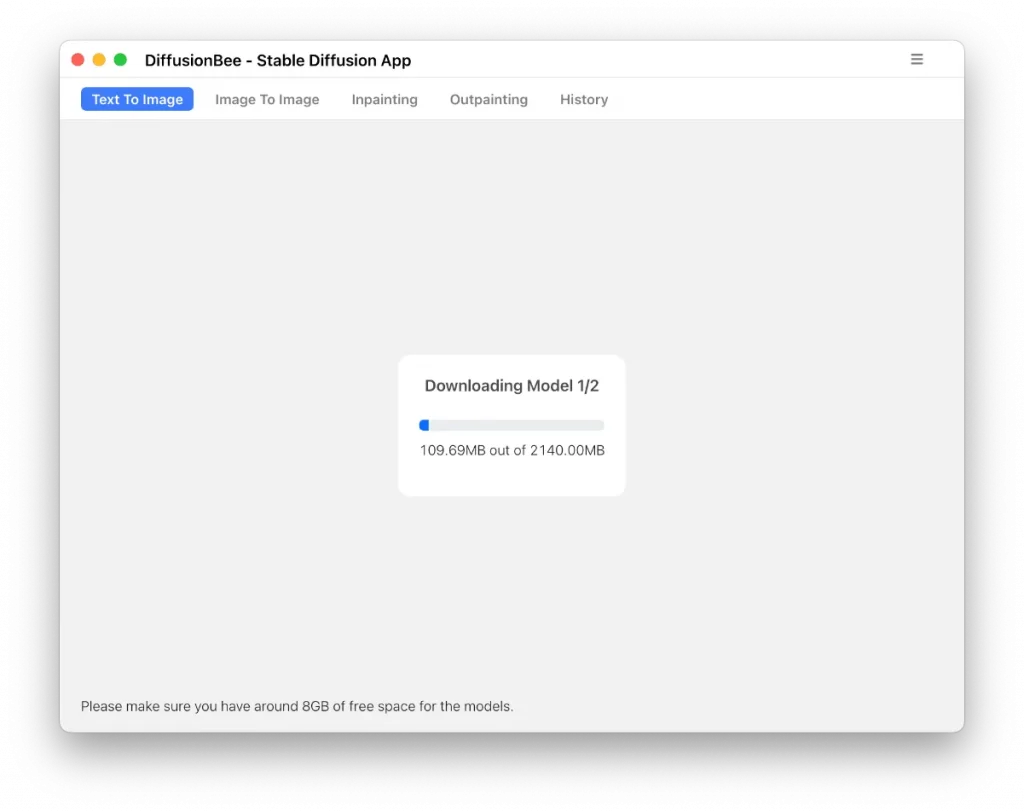

The first time you open DiffusionBee, it will download roughly 8GB of models:

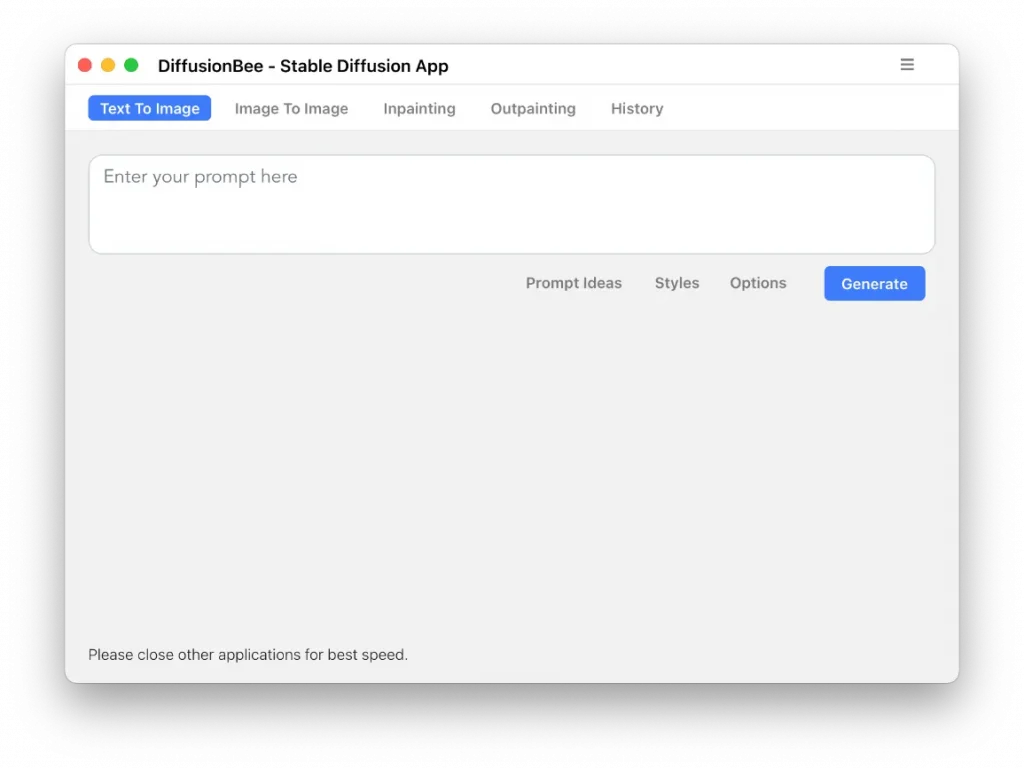

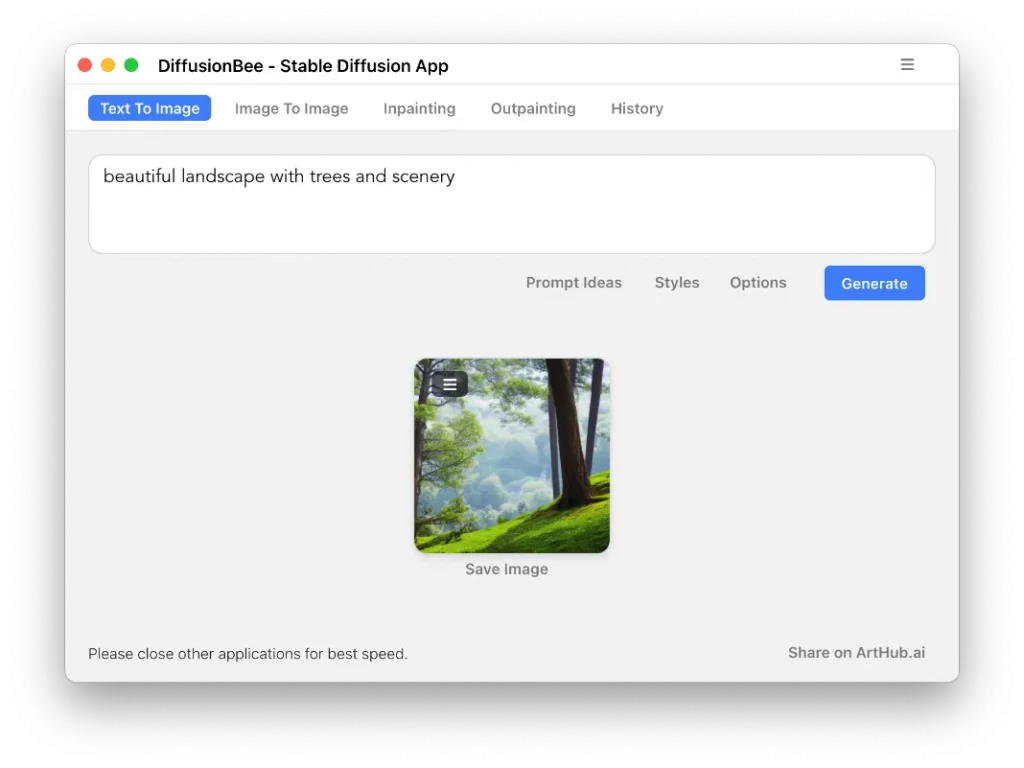

Upon completion, you can begin prompting. Super easy. DiffusionBee reminds you to "Please close other applications for best speed".

Using DiffusionBee

Text To Image

The first time I used DiffusionBee, the model took a minute to warm up. Subsequent generations were fairly fast, taking only a few seconds.

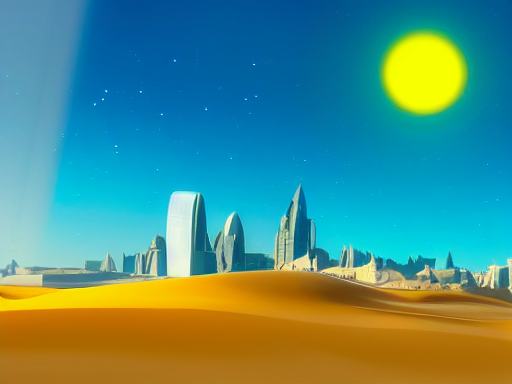

The outputs are what you would expect if you have used Stable Diffusion before:

The interface has helpful style suggestions and you can change the following settings under the options button:

- Num Images

- Image Height

- Image Width

- Steps - how many sampling cycles the AI takes to generate the image.

- Batch size - how many images are produced in a single generation.

- Guidance Scale - how strictly the AI should follow your prompt. A higher number is stricter; a lower number creates more varied results.

- Seed - a random number used as the starting point of the generation.
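To make Seed and Guidance Scale a little less abstract, here is a toy sketch in plain Python. It is not DiffusionBee's code: the function names are made up for illustration, and the guidance formula is the standard classifier-free guidance used by Stable Diffusion, which I am assuming DiffusionBee applies in the usual way.

```python
import random


def starting_noise(seed, size=4):
    """The same seed always produces the same pseudo-random starting noise."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


def apply_guidance(uncond, cond, guidance_scale):
    """Classifier-free guidance: start from the prediction made without the
    prompt and move toward the prediction made with it, scaled by the guidance
    value."""
    return [u + guidance_scale * (c - u) for u, c in zip(uncond, cond)]


# Same seed, same starting point; a different seed gives a different one.
same = starting_noise(42) == starting_noise(42)
different = starting_noise(42) != starting_noise(43)

# A scale of 1.0 returns the prompted prediction unchanged;
# larger values exaggerate the difference the prompt makes.
plain = apply_guidance([0.0, 1.0], [1.0, 3.0], 1.0)
strict = apply_guidance([0.0, 1.0], [1.0, 3.0], 7.5)
```

This is why reusing a seed with the same prompt and settings reproduces an image, and why a higher Guidance Scale sticks closer to the prompt at the cost of variety.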

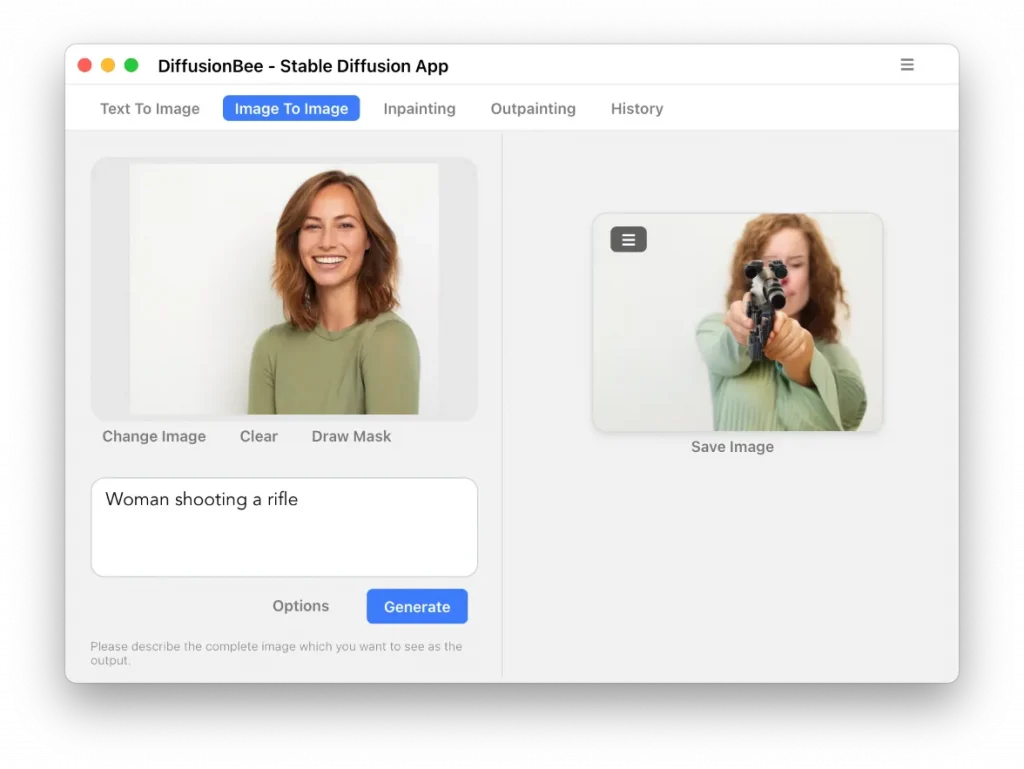

Image To Image

Image To Image lets you do many cool things like increase the quality of your drawings (turn MS Paint drawings into works of art), change the contents of your images, or combine different styles.

With Image to Image generation you can adjust the following options:

- Input Strength - how much the original image influences the result, with 0.9 being highly influential and 0.1 being not very influential

- Num Images

- Steps

- Batch size

- Guidance Scale

- Seed
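One way to picture Input Strength is as the share of the sampling steps that get skipped. The sketch below is an assumption on my part, modeled on how many open-source Stable Diffusion image-to-image pipelines work, not on DiffusionBee's actual code; `img2img_steps` is a hypothetical helper.

```python
def img2img_steps(steps, input_strength):
    """Assumed mapping: a high input strength keeps more of the original image,
    so less noise is added and fewer denoising steps are actually run."""
    if not 0.0 <= input_strength <= 1.0:
        raise ValueError("input_strength must be between 0 and 1")
    noise_fraction = 1.0 - input_strength  # how much of the image is replaced by noise
    return max(1, round(steps * noise_fraction))


light_touch = img2img_steps(50, 0.9)   # original image dominates
heavy_rework = img2img_steps(50, 0.1)  # prompt dominates
```

Under this assumption, a setting of 0.9 only lightly reworks your input, while 0.1 leaves the model almost as free as plain Text To Image.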

Reddit user argaman123 has posted a fantastic example of Image to Image. I've tried using his exact image and prompt with DiffusionBee to compare the results.

Much improved! Unfortunately, I still don't get argaman123's dome.

Here are some other ways you can use Image to Image:

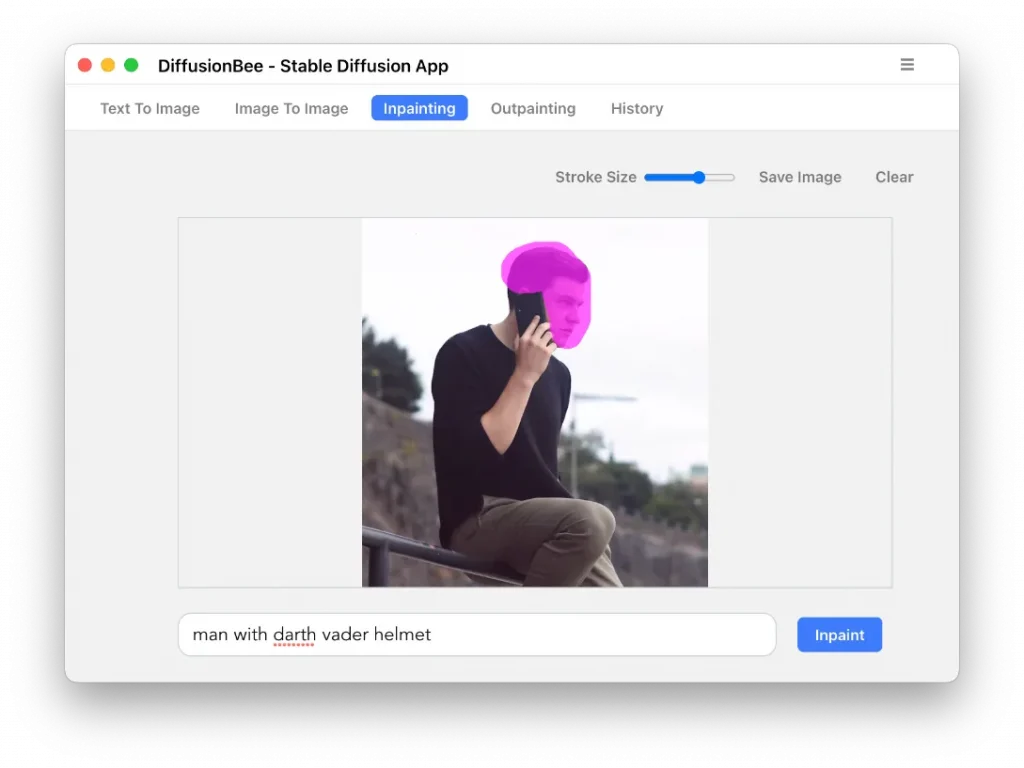

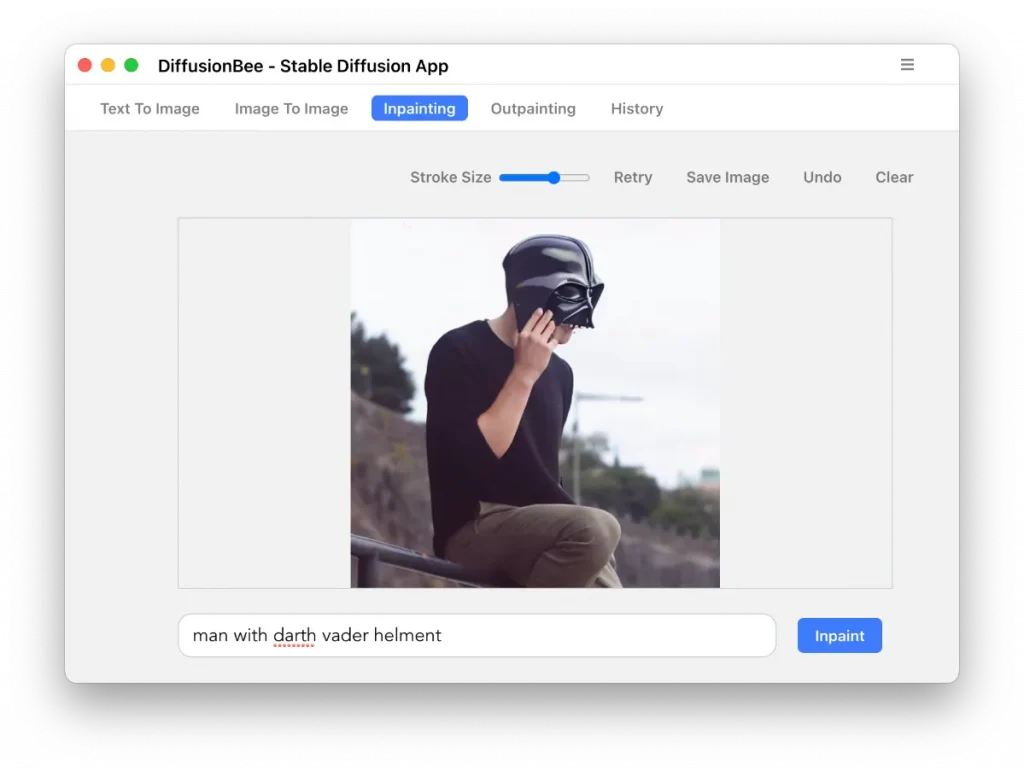

Inpainting

Inpainting lets you paint over an area of the image you want the prompt to apply to. You can use this to change a small part of an image, and add or change details.

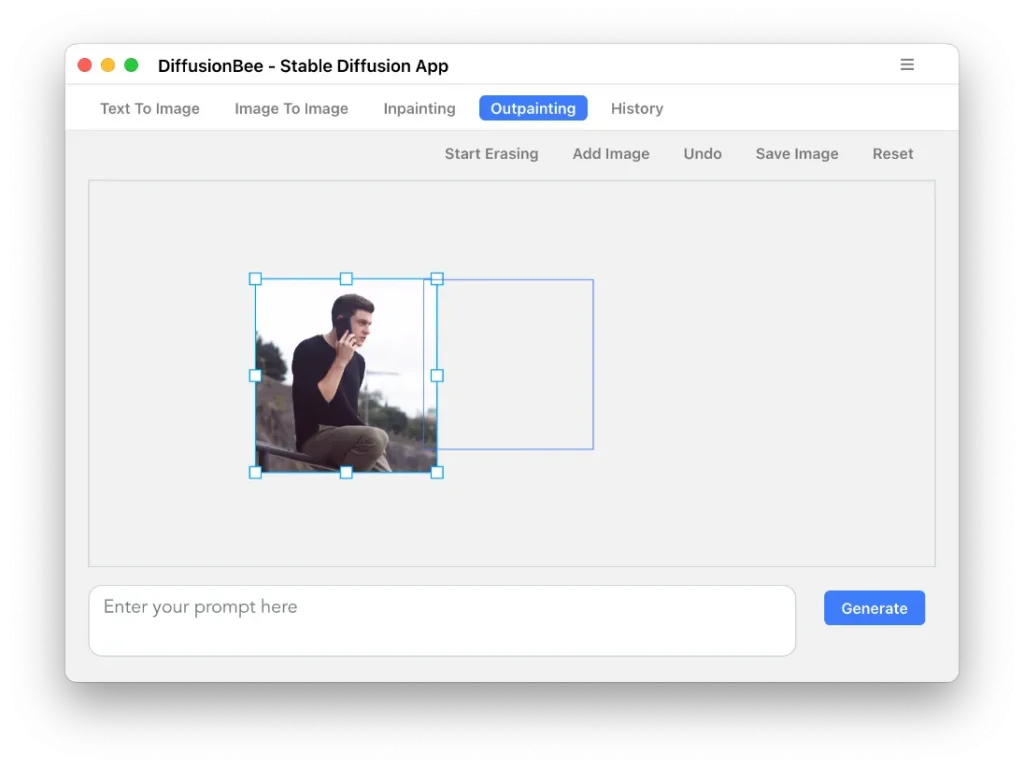

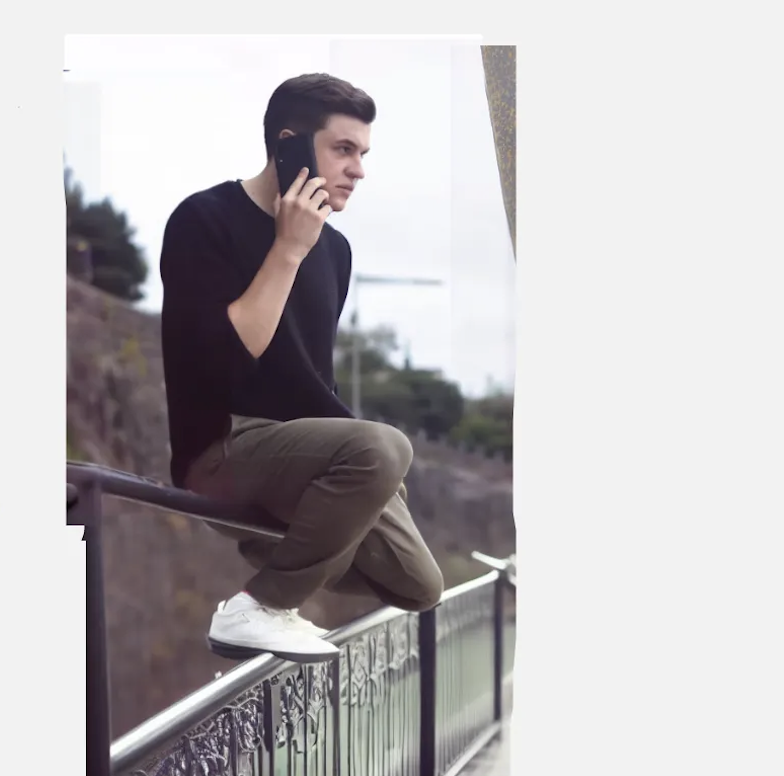

Outpainting

Outpainting lets you add parts to an existing image based on your prompt. The software fills a box which you can reposition around the existing image: even if your original image is not located inside the box, it will still influence the new content.

I find that with outpainting it is always best to include a bit of the original image in the painting box.

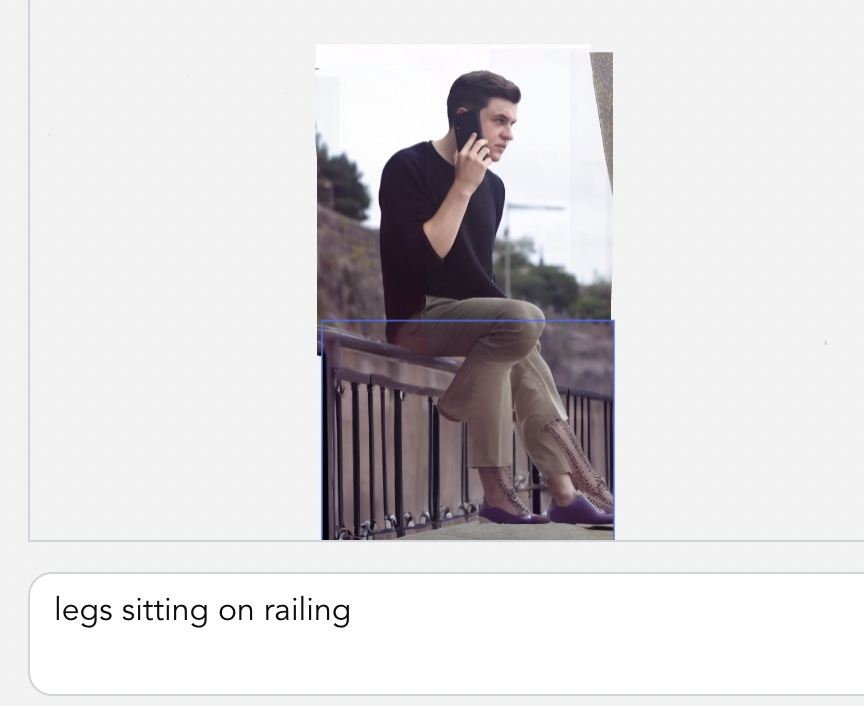

I found myself wondering: should I specify what I want the entire composition to be like, or only the contents of the new box? It really varies and you should test out both. In the above example I prompted to create a "Man sitting on a railing" even though I only needed his legs. Prompting for legs only yielded a much worse result:

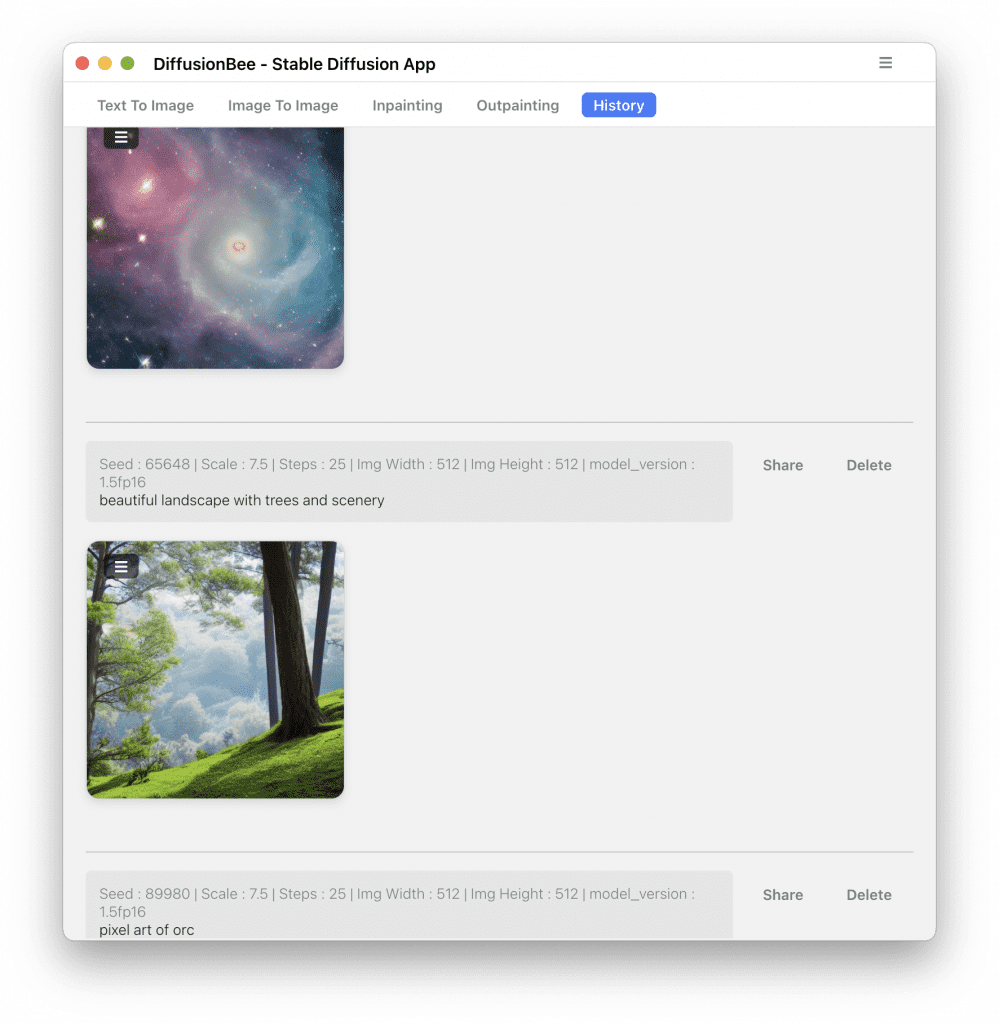

I should note that a nice part of DiffusionBee is that it keeps a running record of all your previous generations in the History tab.

Overall, DiffusionBee is a fantastic app to get started with Stable Diffusion on the Mac. While the results are not as robust as with other applications of Stable Diffusion like AUTOMATIC1111 and InvokeAI, it's definitely the most beginner-friendly app. I'm impressed by how easy to use Gupta made the app; this should be the standard for AI-generation products of the future as the audience moves from tech enthusiasts to the general public.